The Pew Research Center recently published a research paper on respondent focus and data quality. The synopsis of the paper states:

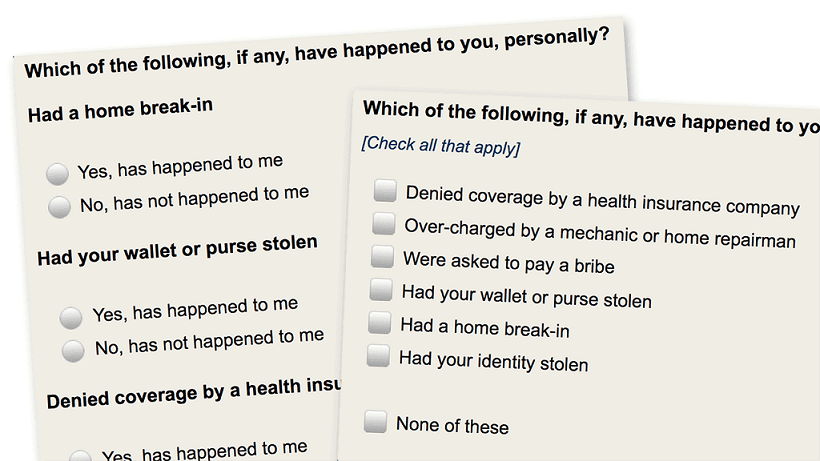

“Forced-choice questions yield more accurate data than select-all-that-apply lists”

What does this mean?

Pew's research indicated that people pay less attention to multiple-choice questions, and put less effort into recalling and responding, when they face many decisions and choices at once. When the same set of choices is presented one at a time, however, they answer more thoroughly and report more.

This is an amazing insight, but it also has a downside. A single multiple-choice question with 10 options now has to be transformed into 10 separate yes/no questions. We've simply expanded the survey from one question to 10, which increases the time taken and potentially the fatigue associated with longer surveys.
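To make that cost concrete, here is a minimal Python sketch of the transformation. The `Question` model, its fields, and the `to_forced_choice` helper are hypothetical illustrations, not the schema of any particular survey platform. The sketch turns each option of a select-all-that-apply question into its own Yes/No item.

```python
from dataclasses import dataclass


@dataclass
class Question:
    """Hypothetical survey question model (illustrative, not a real product schema)."""
    qid: str
    text: str
    options: list[str]
    multi_select: bool = False  # True for select-all-that-apply


def to_forced_choice(q: Question) -> list[Question]:
    """Split a select-all-that-apply question into one Yes/No question per option."""
    if not q.multi_select:
        return [q]  # single-choice questions are already forced-choice
    return [
        Question(
            qid=f"{q.qid}_{i}",            # e.g. q1_1, q1_2, ...
            text=f"{q.text}: {option}",    # stem plus the option being judged
            options=["Yes", "No"],
        )
        for i, option in enumerate(q.options, start=1)
    ]


q = Question("q1", "Apps you use weekly", ["Email", "Maps", "Music"], multi_select=True)
for item in to_forced_choice(q):
    print(item.qid, item.text, item.options)
```

One question with three options becomes three separate questions, each needing its own answer, which is where the extra survey length comes from.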

Two sides of the same coin

The Pew study illustrates a trade-off that researchers face every day: respondent fatigue versus depth of data. Striking that balance is a judgment call we all make daily. We can get more accurate data by adopting a "Focus" model of data collection, in which each element is measured individually, but that typically makes the survey longer.

Our Solution – You can kill two birds with one stone

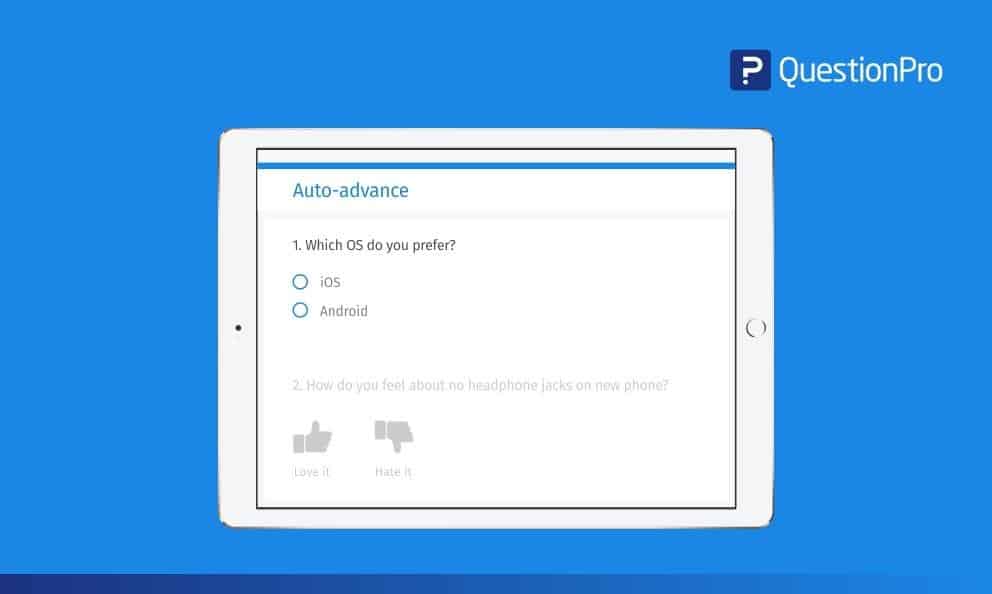

With the release and adoption of our Auto-Advance feature, we've made it easier for respondents to move through multiple questions at nearly the same speed at which they "choose" options.

How does it work?

When Auto-Advance is enabled, clicking an option automatically brings the next question into the viewport, the primary area of the screen where respondents are paying attention.

Respondents don't have to scroll manually from one question to the next. Clicking a choice automatically scrolls the next question into the correct position.
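The click-then-scroll behavior can be modeled as a small state machine. This Python sketch is a hypothetical illustration of the idea, not the actual Auto-Advance implementation: `AutoAdvanceSession`, `click`, and the rule of jumping to the next *unanswered* question are all assumptions. On a real survey page, the returned question id is what the front end would scroll into the viewport.

```python
from typing import Optional


class AutoAdvanceSession:
    """Hypothetical model: answering a question moves focus to the next
    unanswered question, which the UI would then scroll into view."""

    def __init__(self, question_ids: list[str], auto_advance: bool = True):
        self.question_ids = question_ids
        self.auto_advance = auto_advance
        self.answers: dict[str, str] = {}
        self.focus = 0  # index of the question currently in the viewport

    def next_unanswered(self, start: int) -> Optional[int]:
        """Index of the first unanswered question at or after `start`, else None."""
        for i in range(start, len(self.question_ids)):
            if self.question_ids[i] not in self.answers:
                return i
        return None

    def click(self, qid: str, choice: str) -> Optional[str]:
        """Record a choice; return the id of the question to scroll to, or None."""
        self.answers[qid] = choice
        if not self.auto_advance:
            return None  # respondent has to scroll manually
        nxt = self.next_unanswered(self.question_ids.index(qid) + 1)
        if nxt is None:
            return None  # nothing left below this question
        self.focus = nxt
        return self.question_ids[nxt]


s = AutoAdvanceSession(["q1", "q2", "q3"])
print(s.click("q1", "Yes"))  # q2
print(s.click("q2", "No"))   # q3
print(s.click("q3", "Yes"))  # None
```

Skipping already-answered questions is one reasonable design choice; a real implementation might instead always advance to the next question in order.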

When Auto-Advance is enabled, complex matrix questions are automatically broken down into individual questions, each following the same single click-and-scroll model.
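The matrix breakdown can be sketched as follows. This assumes a hypothetical `MatrixQuestion` with a stem, row items, and a shared rating scale, and turns each row into a standalone single-choice question on the same scale. The names, the row texts, and the five-point labels are illustrative assumptions, not the product's data model.

```python
from dataclasses import dataclass

# Illustrative 5-point Likert labels (an assumption; any scale works).
LIKERT_5 = ["Strongly disagree", "Disagree", "Neutral", "Agree", "Strongly agree"]


@dataclass
class MatrixQuestion:
    """Hypothetical grid: one stem, several row items, one shared scale."""
    qid: str
    stem: str
    rows: list[str]
    scale: list[str]


@dataclass
class SingleChoice:
    """Hypothetical standalone question answered with a single click."""
    qid: str
    text: str
    options: list[str]


def explode_matrix(m: MatrixQuestion) -> list[SingleChoice]:
    """Turn each matrix row into its own single-choice question on the same scale."""
    return [
        SingleChoice(
            qid=f"{m.qid}_r{i}",
            text=f"{m.stem}: {row}",
            options=list(m.scale),  # copy so items don't share one mutable list
        )
        for i, row in enumerate(m.rows, start=1)
    ]


grid = MatrixQuestion(
    qid="m1",
    stem="How much do you agree with this statement",
    rows=["Price is fair", "Quality is high", "Delivery was fast",
          "Support was helpful", "I would buy again"],
    scale=LIKERT_5,
)
for q in explode_matrix(grid):
    print(q.qid, "|", q.text)
```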

Research on Research – Does this work?

Yes. Let me walk you through the model we used to validate our hypothesis.

We ran a general consumer study with about 30 data points: 10 multiple-choice questions, 2 matrix/grid questions with 5 Likert-scale items each, and a final open-ended question.

Among a sample of 600 participants, the median time to complete the survey was about 460 seconds (roughly 8 minutes). This was our control group.

We ran the same survey with a separate test group, this time with Auto-Advance enabled. The median completion time dropped to 300 seconds (5 minutes), a reduction of about 35%. Put another way, at the median pace, respondents could complete about 53% more surveys in the same amount of time.
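The relative change between the two medians can be expressed in two ways: the share of time saved, or how many more completions fit into the same time at the median pace. This short Python helper (`relative_change` is a hypothetical name, for illustration only) computes both from the 460 s and 300 s medians.

```python
def relative_change(control_s: float, test_s: float) -> tuple[float, float]:
    """Return (percent less time, percent more completions per unit time)."""
    time_saved_pct = (control_s - test_s) / control_s * 100
    throughput_gain_pct = (control_s / test_s - 1) * 100
    return time_saved_pct, throughput_gain_pct


# Median completion times: 460 s (control) vs 300 s (Auto-Advance).
saved, gain = relative_change(460, 300)
print(f"{saved:.1f}% less time")                      # 34.8% less time
print(f"{gain:.1f}% more completions per unit time")  # 53.3% more completions per unit time
```

The two figures answer different questions, so it helps to state which one a headline percentage refers to.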

Apart from reducing the time taken to complete the survey, we found three additional wins for this methodology:

- More data captured: Respondents skipped significantly fewer questions than the control group.

- Respondent Delight: At the end of both surveys, we asked respondents to rate the survey experience itself. The test group gave a significantly higher rating on this "Delight Factor".

- Increased Focus: This ties back to the first point. The test group skipped fewer questions, which corroborates the Pew Research Center's conclusion that “Forced-choice questions yield more accurate data than select-all-that-apply lists.”
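One common way to check whether a difference in skip rates between two groups is statistically significant is a two-proportion z-test. The following Python sketch is illustrative only: `two_proportion_z` is a hypothetical helper, the counts in the usage lines are made up and are not results from our study, and treating every question shown as an independent trial is a simplification, since skips by the same respondent tend to be correlated.

```python
import math


def two_proportion_z(skips_a: int, shown_a: int, skips_b: int, shown_b: int) -> tuple[float, float]:
    """Two-proportion z-test on skip rates; returns (z, two-sided p-value).

    Assumes each question shown is an independent trial (a simplification).
    """
    p_a = skips_a / shown_a
    p_b = skips_b / shown_b
    pooled = (skips_a + skips_b) / (shown_a + shown_b)  # skip rate under H0: p_a == p_b
    se = math.sqrt(pooled * (1 - pooled) * (1 / shown_a + 1 / shown_b))
    z = (p_a - p_b) / se
    p_value = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p_value


# Hypothetical counts for illustration only (not study data):
# 500 skips in 10,000 questions shown (control) vs 350 in 10,000 (test).
z, p = two_proportion_z(500, 10_000, 350, 10_000)
print(f"z = {z:.2f}, two-sided p = {p:.2g}")
```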

Conclusion

With Auto-Advance, we believe, and our results show, that data quality and respondent fatigue can both be addressed in a balanced and meaningful way. This lets researchers delight respondents and, in turn, make the most of the time respondents are willing to spend thinking carefully about their answers.